If DevOps, Why Not QASec?

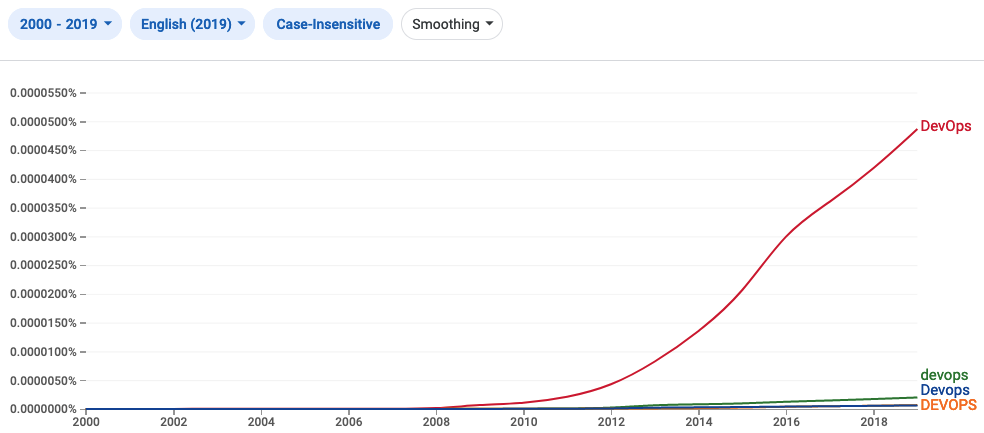

Over the last decade the term DevOps has entered the software development lexicon. While the term, like most tech buzzwords, has been abused to label just about everything for marketing purposes, I'd like to lay out what it means to me, why that matters, and what I think a future could look like with a new role.

What is DevOps?

To some, DevOps is a role within the organisation, distinct from that of an ordinary developer and essentially the same as the SysAdmins that came before it. Some claim the difference is that the role emphasises writing more code, but SysAdmins have always written code, so I don't understand why this usage persists.

My definition is fairly simple:

If you build it, you get to deal with it at three in the morning if it falls over.

The concept of DevOps, at least to me, is the realisation that the idea of specialising developers and operators was mainly a historical artifact of the price per unit of compute at the time. It's the realisation that by having developers who also operate what they develop you end up with a more reliable product, often with a faster time to market. This comes from reducing the communication overhead of divided roles and eliminating any sort of delay or gatekeeping on the part of an operator.

As a parenthetical, I will note that the gatekeeping tendency is somewhat justified, as operators become responsible for all the mistakes of the developer. If the code is poorly written, the developer doesn't have to wake up at all hours to deal with it. As a result, the operator tries to minimise that pain by driving home good practices that tend to reduce it. I can't excuse what that gatekeeping does to organisational velocity, only note a reason it exists. A reason that largely goes away if those taking risks are responsible for them.

Many people evangelise DevOps because it empowers developers to provision and administer the resources they need, while also holding them responsible for the reliability and cost of what they build. It's a strategy I quite enjoy as a developer. No more week-long provisioning tennis matches with IT admins. Goodbye, half-baked internal self-service/ticketing nonsense. Bon voyage, janky communal infrastructure. Now we can focus on what actually needs to get done to deliver value.

If uniting those two roles worked well, why not more?

The Ultimate Generalist

There have been a number of people who think further reducing specialisation within an organisation is a good idea. I both support and discourage this line of reasoning. Should developers be experts in all areas of computing? They can darn well try. I do. Deliberate ignorance is a fairly worrying sign to me. Spend the time and money to let your employees learn as much as they can. Education is a compounding productivity multiplier.

On the other hand, expecting a new hire to know everything is not a reasonable hiring requirement. Not even if you expect to only hire people with a few decades of experience and pay them an unworldly rate. Additionally, how do you think anyone is going to reach that level of competence? Osmosis? No, you always get to train whoever you hire. You may not like that, but you get to anyway. Doesn't matter what a hire knows, they don't know your business.

There is a reason we specialise though. Specialisation tends to increase productivity. You tend to be more productive the more times you have done what you are about to do. As you further specialise you begin to understand ways to improve the process. You find ways to save time or create novel value. This is why practice is so important.

Combining developers and operators can beat this trend without requiring you to hire an ultimate generalist.

Splitting it up creates a moral hazard and communication impedance without providing the needed independence of concern. Software systems that are constantly changing are hard to learn. Understanding the changes as the person who created them is drastically simpler in software than understanding what someone else created. This is why as systems stabilise it can often make more sense to move back to specialising operators from developers again. It's also why the humble SysAdmin, who configures and manages off the shelf software, hasn't gone away with the rise of DevOps.

The QA Backslide

I've become a bit annoyed that talented and valuable quality assurance roles have slowly been disappearing at companies I've worked for and somewhat generally through the industry. It's a pattern I've been seeing and think has to stem from some sort of survivorship bias.

Survivorship bias, for those who've never heard the term, refers to the tendency to only evaluate data that has managed to be collected after some (often hidden) elimination function has been applied to it.

The classic example of survivorship bias comes from World War II, where Abraham Wald was trying to minimise bomber losses to enemy fire. He and his colleagues at Columbia University examined the damage done to aircraft that had returned from missions and recommended adding armour to the areas that showed the least damage. The bullet holes in the returning aircraft represented areas where a bomber could take damage and still fly well enough to return safely to base. The bias would be to conclude the opposite by seeing all the holes as places to armour in order to prevent damage.

In a sort of similar bias, I think managers see QA constantly letting bugs make it through to production. Unfortunately, this will always be the case thanks to a cruel combination of the halting problem and Gödel's incompleteness theorems. Correctness will always be a statistical process. Yet it seems they reason that their QA people are either ineffective or (at best) an optional part of the business' cost centre. In attempting to reduce the cost of developing software, QA looks like an easy redundancy to eliminate.

After all, why can't developers QA their own work? The reason is the same reason so much software is insecure.

The process of getting something to work is completely different from trying to find out how it breaks. The mental processes involved are, at best, superficially similar in that any kind of problem solving is similar. By that logic, let the developers figure out the company's market fit or reporting structure. These are just problems to be solved after all.

More reasonably, I propose we can and should begin to embrace that quality and security go hand in hand. Companies are starting to take IT security seriously given the increasing legal liability. Regulatory requirements have finally begun to gain teeth. Market externalities such as these were always going to require political intervention. It's why you've likely seen real investment in software accessibility where you work over the last few years. It's becoming illegal not to. Now here, I propose it could be possible to use this to begin hiring a role I'd like to call QASec.

What is QASec?

QASec is a better outcome than QA Automation Developers (what some companies seem to think they should hire). The idea is to bring together the roles that love breaking software. Really great QA is a boon to any organisation. I've worked with some extremely talented QAs. They worked to automate the easy stuff by static analysis, automated tests for routine runtime issues, and even ran some rudimentary fuzzing. I want more of that, but coupled with deep knowledge of how to break what I write. I want a security researcher to rip my code apart (and I consider myself somewhat adept at security auditing code).

If you think the role of QA is just mashing buttons in the UI or following some predefined list of things to do with the application every time you change it, you've either a) been really unkind to your QAs or b) haven't seriously thought about the possibilities a QA can provide your organisation. Unit testing and off-the-shelf analysers are there to handle the known unknowns. QA is about finding and fixing the unknown unknowns. QAs aren't gatekeepers or compliance maintainers. They are an opportunity to put the software up against the opposition it faces in the real world without any of the consequences faced in the real world.

On the security side, I think this provides a means to get many more security researchers into companies that badly need them. There are probably companies who think they need QA more than security researchers. Having seen what some security teams and their managers think is the best use of their time, I'm kind of saddened. Sure, the company VPN servers need managing and creating compliance checklists is fine… but that leaves a lot of value on the table like the QA strategy I described above.

I think hiring and training for QASec is also feasible. From a security researcher's perspective, it's mostly a rebrand of red teaming. Unfortunately, QA has a fairly poor reputation with some people, so this role should focus on the technical competence and automation skills of those who do it. They are there to save the business time and money by remediating bugs and breaches that result in legal and reputational damages. Honestly, being good at security auditing tends to be a superset of what your average developer knows about the technology they work on anyway, so competence perception is likely to be quite high. I'd expect the people who fill this role would also serve as experts outside of direct workplace responsibilities and further improve employee training just from the conversations other developers are going to have with them.

On the QA side, learning a lot about security is a great way to get really good at finding issues. There's probably a lot to learn, but it's not insurmountable. It just means learning a lot about how a variety of software systems work at a fundamental level (something everyone would benefit from). On some level you get to work at becoming smarter than your average developer. Security theory mostly consists of a wide variety of patterns that lead to ways the software can be made to do things it shouldn't. It's about problem solving to overcome layers of defence, and trying to think about things from a new perspective. It also takes looking beyond a single unit of code, because vulnerabilities tend to present themselves around the interfaces different developers worked behind. This is because they didn't fully understand what the other side of that interface did or failed to account for the possibility of malice from beyond that interface.

QASec in the Organisation

I'm not going to pretend like I know how this role fits best into your organisation. I can however take a swing at a few possible examples and then hope others share their experience on the subject over time.

The first constraint is the cross-boundary nature of the role. As I mentioned, it is at the interfaces (sometimes called abstractions) that many problems exist. This implies that embedding someone within a single team would be the wrong structure. While QASec people are likely to work with teams prior to launch or at the planning stage of a project, it's also important that they be given boundary responsibilities. I might even consider a rotating assignment that looks at the boundaries between each pairing of teams within the organisation.

Another front is going to be automating compliance and verification. As I mentioned, great QAs tend to automate the trivial stuff. Empower your QA to automate. The role should encourage developing new lint rules, generalised unit tests, and implementing a culture of fuzz testing within the organisation. I do not mean QASec should write unit tests for developers. Developers can write their own unit tests. I mean beyond unit testing. No, not integration testing. I meant tests that catch issues QASec is routinely finding within the organisation. Tests that can be deployed across teams, code bases, etc. to catch things the developers seem to consistently miss.
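To make the idea of an organisation-specific lint rule concrete, here is a minimal sketch using Python's standard `ast` module. The rule itself (flagging `execute()` calls whose query is built by string formatting) and the names `StringBuiltSqlRule` and `check_source` are my own illustrative assumptions, not something from a particular linter. A real rule would likely need tuning to avoid false positives.

```python
import ast


class StringBuiltSqlRule(ast.NodeVisitor):
    """Hypothetical lint rule: flag .execute(...) calls whose first
    argument is assembled with string formatting instead of placeholders."""

    def __init__(self):
        self.findings = []  # list of (line number, message)

    def visit_Call(self, node):
        is_execute = (
            isinstance(node.func, ast.Attribute) and node.func.attr == "execute"
        )
        if is_execute and node.args:
            query = node.args[0]
            # f-strings parse as JoinedStr, "+" and "%" formatting parse as
            # BinOp, and "...".format(...) parses as a Call on ".format".
            built_dynamically = isinstance(query, (ast.JoinedStr, ast.BinOp)) or (
                isinstance(query, ast.Call)
                and isinstance(query.func, ast.Attribute)
                and query.func.attr == "format"
            )
            if built_dynamically:
                self.findings.append(
                    (node.lineno, "query passed to execute() is built from a string expression")
                )
        # Keep walking so nested calls are checked too.
        self.generic_visit(node)


def check_source(source):
    """Parse Python source text and return the rule's findings."""
    rule = StringBuiltSqlRule()
    rule.visit(ast.parse(source))
    return rule.findings
```

Something like `check_source(open(path).read())` could then run across every repository in CI, which is the "deployed across teams" part I care about.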

In a similar spirit, I'd also see QASec working to develop libraries and tools for developers that implement best practices and facilitate doing the correct thing (instead of the existing strategy that leads to the wrong thing). An example would be how using React leads to a far lower incident rate of XSS attack vectors, or how using parameterised queries or stored procedures dramatically reduces the likelihood of SQL injection attacks while improving database performance. These are fantastic improvements to both developer productivity and business risk profile, and they can be achieved when QASec builds something in order to address an issue.
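As a small illustration of "making the correct thing the easy thing", here is a sketch of the SQL injection case using Python's built-in `sqlite3` module. SQLite has no stored procedures, so I'm swapping in a parameterised query, which relies on the same separation of code and data. The table, the helper names, and the payload are all hypothetical.

```python
import sqlite3


def make_demo_db():
    """Build a throwaway in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable: the input becomes part of the SQL text itself.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # The "?" placeholder keeps the input as data; it is never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "nobody' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # every row leaks
    print(len(find_user_safe(conn, payload)))    # no rows match
```

A QASec-built library would expose only something like `find_user_safe`, so the unsafe pattern never gets written in the first place.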

I'd focus on developing fuzzers. In their 2020 paper The Relevance of Classic Fuzz Testing: Have We Solved This One?, Prof. Barton Miller and colleagues again looked at just standard command line utilities on macOS, Linux, and FreeBSD and found that 12-19% of these widely used programs would crash on random input. These crashes can be leveraged into exploits. This work began in 1988, when Prof. Miller had students generate and send random input to command line tools and found that 25-33% of those widely used programs would crash. In 30 years the problem has at best gotten 50% better. Fuzzing is fairly easy once you learn how to do it. It just takes time to implement and a desire to really find where things break.
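To show how little it takes to get started, here is a minimal sketch of random-input fuzzing in the spirit of the classic approach: throw random bytes at a target and record anything that fails in an undocumented way. The target `parse_message` is a hypothetical parser with bugs I planted for the demonstration, and `fuzz` is my own helper, not a real fuzzing library. Serious work would use a coverage-guided fuzzer instead.

```python
import random


def parse_message(data: bytes) -> bytes:
    """Hypothetical target: a length-prefixed message parser with latent bugs."""
    length = data[0]                  # bug: IndexError on empty input
    payload = data[1:1 + length]
    checksum = data[1 + length]       # bug: IndexError on truncated input
    if sum(payload) % 256 != checksum:
        raise ValueError("bad checksum")  # the documented way to reject input
    return payload


def fuzz(target, runs=1000, max_len=16, seed=0, expected=(ValueError,)):
    """Feed random byte strings to target and collect unexpected exceptions."""
    rng = random.Random(seed)  # seeded so any crash can be reproduced
    crashes = []
    for _ in range(runs):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except expected:
            continue  # a clean, documented rejection is not a finding
        except Exception as exc:
            crashes.append((data, type(exc).__name__))
    return crashes


if __name__ == "__main__":
    found = fuzz(parse_message)
    print(f"{len(found)} crashing inputs, e.g. {found[0]}")
```

Each recorded input is a ready-made regression test once the bug is fixed.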

As a final broader thought, I might make QASec responsible for system measurement in general within the organisation. Software has been getting measurably slower over time despite the almost unimaginable performance improvements hardware manufacturers have been able to deliver over the same time. Data systems often have demonstrably incorrect behaviour that can lead to corruption or complete loss. I might consider tasking QASec with starting a culture of empirical system measurement. Many software organisations don't do what I'd consider basic system design: requiring measured performance targets for things like latency or throughput. To bootstrap that, I might lean on QASec.
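As a rough sketch of what a first step towards empirical measurement could look like, the snippet below times an operation repeatedly, summarises the latency distribution, and checks it against a budget. The function names and the idea of a p99 budget are my own assumptions for illustration. Real measurement would also need warm-up runs and production-like load.

```python
import statistics
import time


def measure_latency(operation, runs=200):
    """Return per-call latencies in seconds, measured with a monotonic clock."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append(time.perf_counter() - start)
    return samples


def summarise(samples):
    """Summarise latencies as percentiles rather than a single average."""
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 98 is p99.
    cuts = statistics.quantiles(samples, n=100)
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98], "max": max(samples)}


def meets_budget(summary, p99_budget_seconds):
    """True if the measured p99 latency is within the agreed budget."""
    return summary["p99"] <= p99_budget_seconds


if __name__ == "__main__":
    summary = summarise(measure_latency(lambda: sum(range(10_000))))
    print(summary, meets_budget(summary, p99_budget_seconds=0.01))
```

Wiring a check like `meets_budget` into CI turns a latency requirement from an aspiration into something that can fail a build.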

Summary

Combine security researchers and QA. Both are needed and the skills of each are more aligned than development is with either. There's a great need for security research in businesses that may be best obtained by rebranding the concept of QA. I'm also not going to lie, it's kind of fun to say Sec-Q-A-Team.